Saman Tabatabaeian

Technical Director -- Cloud, DevOps & Platform Engineering

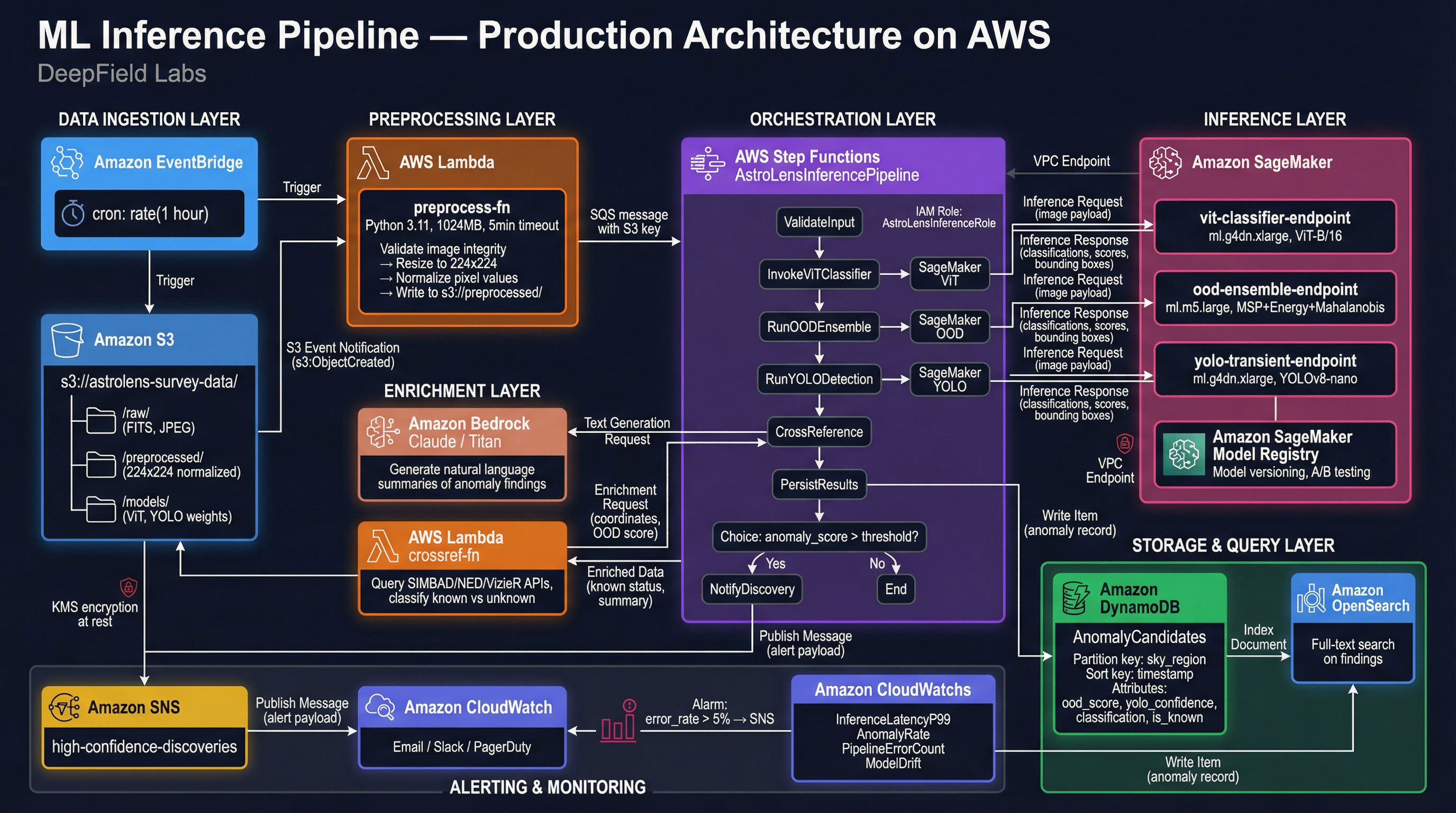

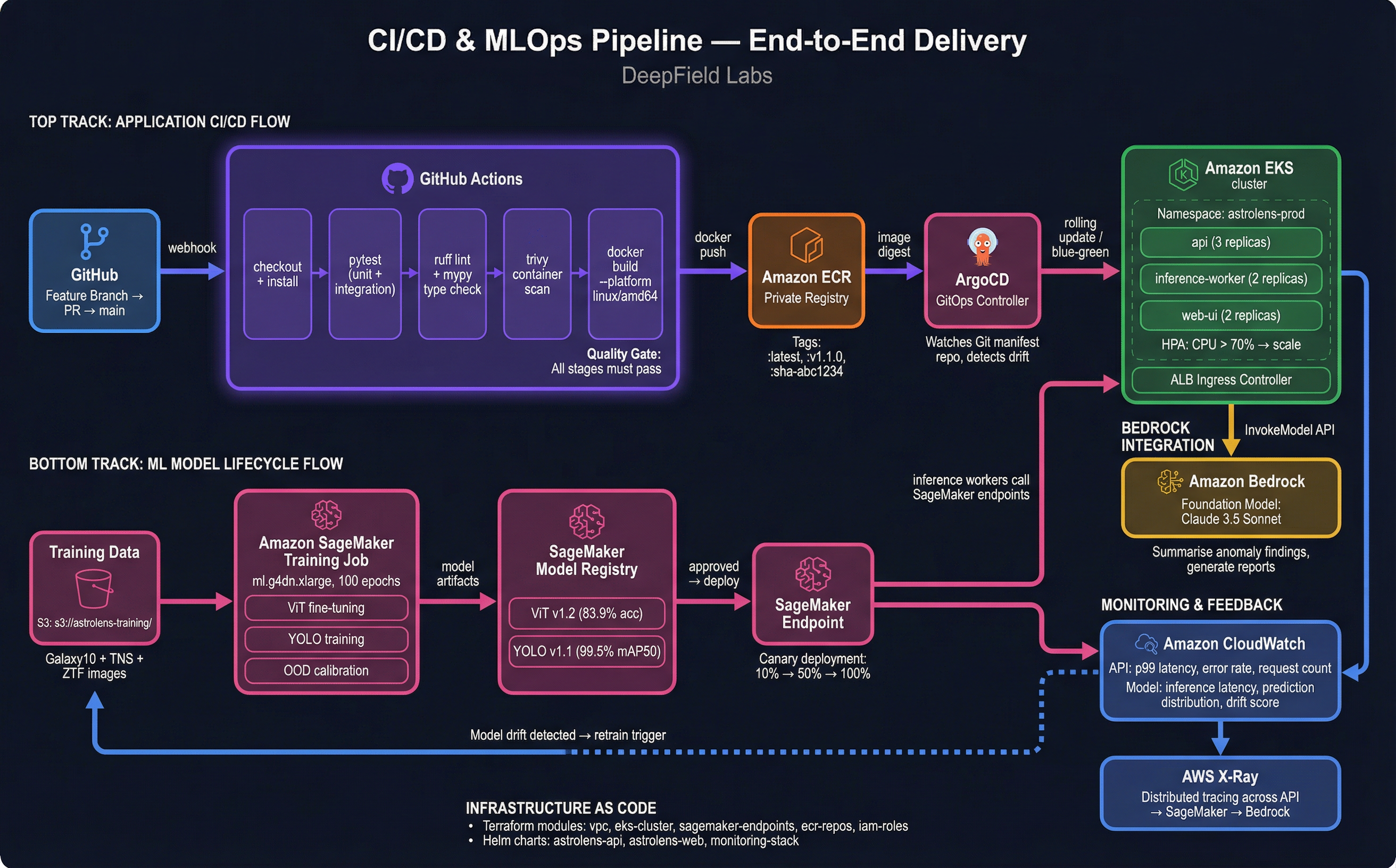

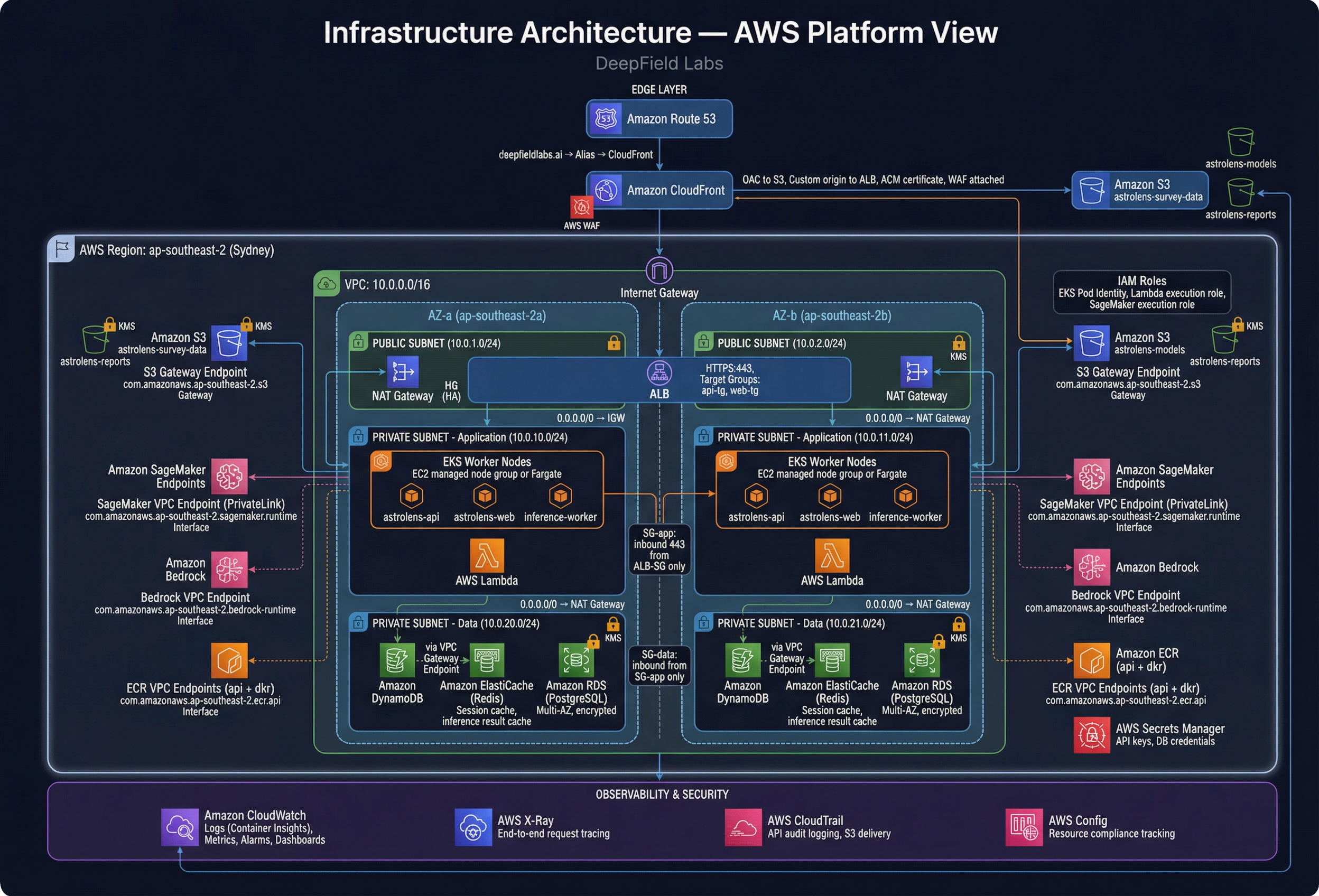

Saman is an AWS cloud and DevOps specialist with more than 10 years of experience delivering cloud platform consultancy and production-grade solutions for large organisations. He designs and automates secure, scalable AWS architectures end-to-end, working across solution design, CI/CD, platform engineering, and technical leadership, with a focus on building reusable patterns that teams can ship, operate, and evolve with confidence.

Before specialising in cloud, Saman built a strong software engineering foundation across Linux embedded systems, monitoring applications, and cross-platform desktop development with Qt, along with low-level networking and protocol implementation in C. That mix of deep engineering roots and modern AWS delivery gives him practical judgement on every engagement, balancing speed, reliability, security, and cost without the hype.

AWS Solutions Architect

AWS Data Analytics

AWS Security

Cloud Architecture

DevOps & Platform Engineering

Technical Leadership

ML/AI Infrastructure

Python

Qt / Cross-Platform UI

Docker & Kubernetes

CI/CD Automation

Infrastructure as Code

Serverless & Microservices

Embedded Systems